Count模块源码解读

计数的基本方法是确定边的数量,通过子图完全图边和点的关系来计算点数,最后得到答案

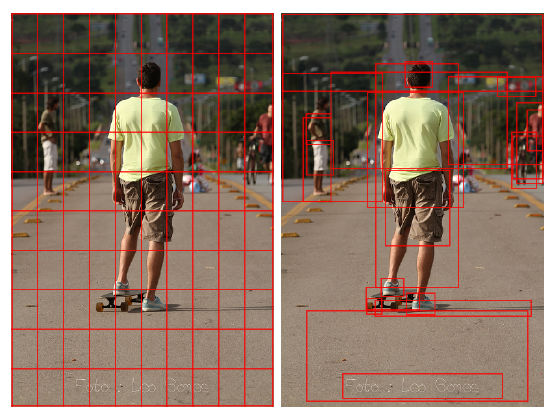

top n bounding box

首先处理出处理出top n的注意力权重和对应的bounding box

1 | def filter_most_important(self, n, boxes, attention): |

boxes的shape为(n, 4, m),attention的shape为(n, m),其中n为batch size,m为一张图中bbox的数量

1 | torch.topk(input, k, dim=None, largest=True, sorted=True, out=None) → (Tensor, LongTensor) |

k表示top k,dim为指定维度,如果未指定则默认最后一维,largest表示返回最大还是最小(True/False),sorted表示返回的top k是否需要排序,out可选输出张量(Tensor, LongTensor)

返回值是一个元组(values,indices)

源码中指定的dim=1是bbox维度,即选取attention权值最大的k个bbox

1 | torch.unsqueeze(input, dim) → Tensor |

unsqueeze用于维度扩充,dim为插入的维度下标

expand是将torch的各个维度扩展到更大的尺寸,在unsqueeze之后,idx变为(n,1,m),然后扩展为(n,4,m),和bbox的tensor尺寸相同

1 | torch.gather(input, dim, index, out=None, sparse_grad=False) → Tensor |

gather函数在dim维度按照index对input进行索引把对应的数据提取出来的,这里是提取出top k的attention权值对应的bbox

Calculate A && D

然后计算注意矩阵和距离矩阵

1 | relevancy = self.outer_product(attention) |

看下矩阵外积(outer_produce)的实现

1 | def outer(self, x): |

(x.size()[-1],)表示将x.size()的最后一维取出并将其表示为元组,元组相加是拼接而非按位相加,得到size为(n,m,m),所以outer的作用就是将x变成两个(n,m,m)的tensor,将权值放在对应的倒数第一维和倒数第二维,outer_product将x转化后得到的a和b相乘得到外积

看一下Intersection over Union(IoU)的实现

1 | def iou(self, a, b): |

先看一下 intersection

1 | def intersection(self, a, b): |

a[:, :2, :]表示将dim=1的前两个切片(左上角坐标)取出,对应的a[:, 2:, :]则为右下角坐标

1 | torch.max(input, other, out=None) → Tensor |

torch.max可以将取两个tensor对应位置的最大值,返回是一个tensor,两个tensor不一样大时可以广播计算,不过这里源码直接将其拉伸成一样大的了

将左上角取最大值就得到了相交区域的上界,同理,右下角取最小值得到了相交区域的下界

1 | torch.clamp(input, min, max, out=None) → Tensor |

clamp给tensor的元素限定上下界(min,max),如果两个bbox没有交集,则可能计算出来的是负数,所以需要设定下界0,将两个inter对应的长宽相乘得到每两个bbox的相交面积area,得到面积交矩阵

然后看一下area

1 | def area(self, box): |

显然计算的是bbox的面积,同时用了clamp做了极小限制防止出现负数

在iou中将得到的area通过unsqueeze在矩阵上横向扩展和纵向扩展,相加再减去面积交矩阵即可得到面积并矩阵,IoU = 面积交/面积并,计算即可

然后用得到的注意力矩阵A和距离矩阵D去计算$\bar{A}$

intra-object dedup

1 | score = self.f[0](relevancy) * self.f[1](distance) |

将A和D通过两个不同的激活函数,然后点乘来消除intra-object

来看一下激活函数的实现

在__init__里有

1 | self.f = nn.ModuleList([PiecewiseLin(16) for _ in range(16)]) |

查看作者写的分段线性函数PiecewiseLin

1 | class PiecewiseLin(nn.Module): |

cumsum是累加

1 | torch.cumsum(input, dim, out=None, dtype=None) → Tensor |

返回的tensor y满足$y_i=x_1+x_2+\dots+x_i$

通过w=w/w.sum()和self.weight.data[0] = 0保证$f_k(0)=0,f_k(1)=1$

inter-object dedup

然后消除inter-object,方法是等比例缩小边的权重

1 | dedup_score = self.f[3](relevancy) * self.f[4](distance) |

dedup_score就是计算了式中的X,$X = f_4(A)\odot f_5(D)$

看一下deduplicate函数

1 | def deduplicate(self, dedup_score, att): |

其中用了一个outer_diff函数

1 | def outer_diff(self, x): |

和outer_product的功能类似,用这个函数求了attention的两两权重差和dedup_score的两两差,按照式子计算了proposal两两之间的相似度

1 | torch.prod(input, dim, keepdim=False, dtype=None) → Tensor |

prod是对指定维度dim求元素积,对应公式中的$\prod$

$row_sims$计算了proposal i和其它proposal相似的次数

对于$edge_{ij}$来说值需要除以${row_sims}_i$和${row_sims}_j$,所以这里处理了$row_sims$的$outer_product$,即$all_sims$

然后对score进行了缩放,即得到了$\bar{A}\odot ss^T$

aggregate the score

答案统计用的是$|V|=\sqrt{|E|}$,因此还需要将在intra-object dedup中消去的自环加回来

1 | correction = self.f[0](attention * attention) / dedup_per_row |

算自环直接attention点积然后除倍率,矩阵点积对于边权值是线性变化,所以乘s而不是乘$s\odot s$,原式中diag是将数值填入对角矩阵的对角线,因为代码中是直接计数所以不需要这步操作

直接求和得到了|E|,开方即可得到|V|,也就是正确的计数

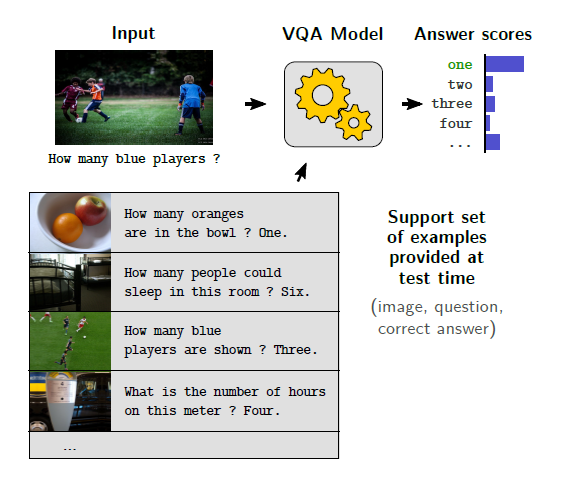

并将答案转化为特殊的one-hot编码$o=[o_0,o_1,\dots,o_n]^T$

$o_i=max(0,1-|c-i|)$

若答案为整数,则one-hot编码结果为对应答案位置为1,其余位置为0,否则o对应两个one-hot编码的线性插值

1 | def to_one_hot(self, scores): |

最后考虑尽可能用A和D矩阵中为1和0的数字来计算答案,计算两个置信系数,然后返回最终的计数特征

1 | one_hot = self.to_one_hot(score) |